Self-driving cars. Optimized healthcare. Digital personal assistants that know your next purchase before you even make it. In the past decade, machine learning has revolutionized the way we interact with our world. As with any technology, its application is bounded not just by human ingenuity, but also by human intent. For instance, artificial intelligence has made possible the advent of precision medicine, which targets treatments based on a patient’s genetic makeup, medical history, and lifestyle data, potentially saving thousands of lives. On the other hand, such technology has also been used with less virtuous goals in mind. Recall Cambridge Analytica’s attempts to sway the 2016 U.S. presidential election with data from Facebook. At the end of the day, machine learning is a tool. When used wisely, this technology can shape our world for the better.

Here in the Center for Conservation Innovation at Defenders, we have been asking whether and how we can leverage machine learning to protect wildlife and conserve the habitat on which endangered species depend. We believe machine learning may be especially useful for environmental compliance. The efficacy of environmental laws, such as the Clean Air Act, the Clean Water Act, and the Endangered Species Act (ESA), depends on our ability to enforce them. Although Congress has established agencies to this end, the resources allocated to them are not enough to detect, let alone pursue, every violation of environmental laws. That’s where environmental NGOs step in.

But the burden of monitoring each species has grown more and more costly, especially in recent years. As the number of threatened species has increased, the federal resources allocated for the maintenance of the ESA have stagnated (see Figure 1).

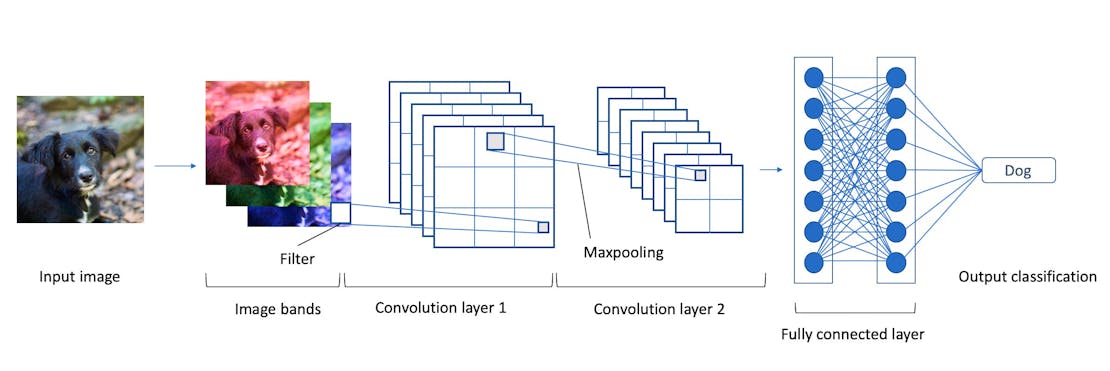

To meet this challenge, Defenders is building convolutional neural networks (CNNs), a type of machine learning model often used for image classification, to better monitor the habitat of endangered species. These networks use the same algorithms found in facial recognition technology. The basic framework of a CNN consists of an input layer (the image), an output layer (the classification), and multiple hidden layers that are connected to each other much as neurons are connected by synapses. The hidden layers incorporate a stack of three modules, each performing a different mathematical operation. The most important is the convolution layer, which slides a filter with specific parameters across each band of the image (red, green, and blue). At each position, it multiplies the filter's values by the pixel values they cover and sums the results. Through convolving, the layer extracts a mathematical representation of the image. Just as the neurons in our brain can learn to distinguish one feature from another, so too can a neural network. Over hundreds of thousands of training iterations, the hidden layers update their parameters to recognize the target feature.
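To make the convolution step concrete, here is a minimal sketch in plain Python. It is an illustration only, not the code behind our models: the function name `convolve_band`, the tiny 4x4 "image," and the hand-picked filter values are all assumptions made for this example. In a real CNN the filter values are learned during training rather than chosen by hand.

```python
def convolve_band(band, kernel):
    """Slide `kernel` over one image band (no padding, stride 1).

    At each position, multiply the filter values by the pixels they
    cover and sum the results. (Strictly speaking this is
    cross-correlation, which is what deep learning libraries
    typically compute under the name "convolution".)
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(band) - kh + 1
    out_w = len(band[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = sum(
                band[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical single-band image: dark (0) on the left, bright (1) on the right.
band = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked filter that responds where brightness increases left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve_band(band, kernel)
for row in feature_map:
    print(row)
```

The resulting feature map is largest in the middle column, exactly where the dark-to-bright edge sits. That is the sense in which a convolution "extracts a representation" of the image: each filter highlights where its pattern occurs.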

Instead of figuring out where faces are in a selfie, we’re training CNNs to detect and map near real-time alterations to landscapes that can be seen in satellite images. For example, here is a CNN trained to find ground-mounted solar panel arrays at landscape scale, where the model has outlined the panels. These results can then be used to update maps of the locations of large solar arrays across the landscape. With these models, we can remotely determine if construction is occurring in the habitat of an endangered species without ever having to leave the office.

Applying machine learning to satellite imagery comes with its own challenges. Whereas ordinary photographs have only three bands, satellite imagery may have a dozen or even hundreds, depending on the sensor. Adapting CNNs from typical images to multispectral satellite imagery is a challenge we welcome, because solving it could substantially change the way that conservation laws are enforced, helping us to save wildlife.
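One way to picture the difference is that a convolution filter needs one slice of weights per band, so moving from three bands to a dozen changes the shape of every first-layer filter. The sketch below is a simplified illustration under that assumption, not our production approach; `convolve_multiband`, `constant_band`, `ones_kernel`, and the pixel values are all hypothetical names and data made up for this example.

```python
def convolve_multiband(image, kernel):
    """Convolve a multi-band image with a filter that has one slice per band.

    image:  list of bands, each a 2D list of pixel values
    kernel: list of 2D filter slices, one per band
    Products are summed across all bands, giving one 2D feature map.
    """
    assert len(image) == len(kernel), "filter needs one slice per band"
    kh, kw = len(kernel[0]), len(kernel[0][0])
    out_h = len(image[0]) - kh + 1
    out_w = len(image[0][0]) - kw + 1
    return [
        [
            sum(
                image[b][i + di][j + dj] * kernel[b][di][dj]
                for b in range(len(image))
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def constant_band(value, size=3):
    """Hypothetical band in which every pixel has the same value."""
    return [[value] * size for _ in range(size)]


def ones_kernel(n_bands, size=2):
    """Hypothetical filter of all ones, with one slice per band."""
    return [[[1] * size for _ in range(size)] for _ in range(n_bands)]


# A three-band "photograph" and a twelve-band "satellite image" (made-up data).
rgb = [constant_band(v) for v in (1, 2, 3)]
multispectral = [constant_band(v) for v in range(1, 13)]

rgb_map = convolve_multiband(rgb, ones_kernel(3))
ms_map = convolve_multiband(multispectral, ones_kernel(12))

print(rgb_map)  # each value sums 2x2 pixels over 3 bands
print(ms_map)   # each value sums 2x2 pixels over 12 bands
```

The same function handles both inputs, but the twelve-band filter carries four times as many weights as the three-band one. That is one reason a network trained on ordinary photographs cannot simply be pointed at multispectral imagery without adaptation.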

Although we’re a long way from replacing physical monitoring altogether, we hope that our work will make more regular monitoring for environmental compliance possible. By reducing the cost of monitoring, we can keep tabs on more species and habitats, and we may be able to allocate more resources to species recovery, habitat restoration, and other areas vital for wildlife conservation.